I am glad that Apple released a new device last week. It was a refreshing change from what most IT discussions have been about recently. And what topic is most discussed? AI, of course.

And for good reason! There’s lots and lots happening in this space. New AI technology is coming out. New uses for AI are being developed. It’s an exciting space. Like many, I am having a hard time keeping up with it all. But try and keep up I must. And as I do, I have found some interesting links for me (and you) to read:

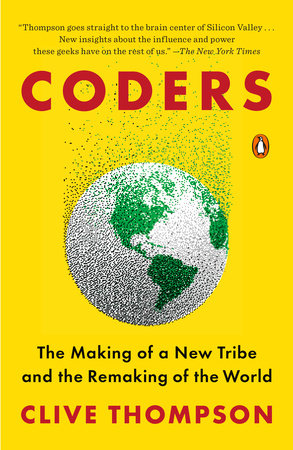

Clive Thompson has a grim take on the boring apocalypse of today’s AI.

Also grim is this story in WiReD about Tessa, the eating disorder chatbot, and why it had to be suspended. Don’t leave your AI unattended!

Grimly funny: what happens when a lawyer misuses ChatGPT? Hijinks ensue!

Not grim, but clever: A Vienna museum turned to AI and cats — yes AI and cats — to lure visitors.

Also in WiReD is this thoughtful piece on how non-English languages are being left out of the AI revolution, at least for now. I see this changing really fast.

A good New York Times piece on how training chatbots on smaller language datasets could make them better.

Fascinating to see how much AI is listed in Zapier’s app tips here.

Also fascinating: Google didn’t talk about any of their old AI while discussing their new AI during their I/O 2023 event recently. I wonder why. I wonder if they’re missing an opportunity.

AI junk: Spotify has reportedly removed tens of thousands of AI-generated songs. Also junk, in a way: AI interior design. Still more garbage AI uses, this time in the form of spam books written using ChatGPT.

This seems like an interesting technology: liquid neural networks.

What is Falcon-40B? Only “the best open-source model currently available. Falcon-40B outperforms LLaMA, StableLM, RedPajama, MPT, etc.” Worth a visit.

Here’s a how-to on using AI for photo editing. Also, here’s some advice on writing better ChatGPT prompts.

This is a good use of AI: accurately diagnosing tomato leaf diseases.

For those that care: deep learning pioneer Geoffrey Hinton quit Google.

Meanwhile, Sam Altman is urging the US Congress to regulate AI. In the same period, he threatened to withdraw from Europe if there is too much regulation, only to back down. It seems like he is playing people here. Writers like Naomi Klein are rightly critical. Related is this piece: Inside the fight to reclaim AI from Big Tech’s control | MIT Technology Review.

Here’s another breathless piece on the AI startup scene in San Francisco. Yawn. Here’s a piece on a new startup with a new AI called Character.ai that lets you talk to famous people. I guess….

Here are some things my company is doing with AI: Watsonx. But also: IBM to pause hiring for back office jobs that AI could kill. Let’s see about that.

Finally, this story from BlogTO on how “Josh Shiaman, a senior feature producer at TSN, set out to create a Jays ad using text-to-video AI generation, admitting that the results ‘did not go well.’” “Did not go well” is an understatement! It’s the stuff of nightmares! 🙂 Go here and see.

In some ways, maybe that video is a good metaphor for AI: starts off dreamy and then turns horrific.

Or maybe not.

Yep, it’s true. If you have some technical skill, you can download this repo from GitHub: rasbt/LLMs-from-scratch: Implementing a ChatGPT-like LLM in PyTorch from scratch, step by step and build your own LLM.

I have been arguing recently about the limits of the current AI and why it is not going to take over the job of coding yet. I am not alone in this regard. Clive Thompson, who knows a lot about the topic, recently wrote this:
