AI a year ago was mostly talking about AI. AI today is about what to do with the technology.

There are still good things being said about AI. This in-depth piece by Navneet Alang here in the Walrus was the best writing on AI that I’ve read in a long time. And this New York Times piece on the new trend of AI slop got me thinking too. But for the most part I’ve stopped reading pieces on what AI means, or gossip pieces on OpenAI.

Instead I’ve been focused on what I can do with AI. Most of the links that follow reflect that.

Tutorials/Introductions: for people just getting started with gen AI, I found these links useful: how generative AI works, what is generative AI, how LLMs work, best practices for prompt engineering with openai api, a beginners guide to tokens, a chatGPT cheat sheet, what are generative adversarial networks gans, demystifying tokens: a beginners guide to understanding AI building block, what are tokens and how to count them, how to build an llm rag pipeline with llama 2 pgvector and llamaindex and finally this: azure search openai demo.

Software/Ollama: Ollama is a great tool for experimenting with LLMs. I recommend it to anyone wanting to do more hands-on work with AI. Here’s where you can get it. This will help you with how to set up and run a local llm with ollama and llama 2. Also this: how to run llms locally on your laptop using ollama. If you want to run it in Docker, read this. Read this if you want to know where Ollama stores its models. Read this if you want to customize a model. If you need to uninstall Ollama manually, you want this.

Software/RAG: I tried to get started with RAG fusion here and was frustrated. Fortunately my manager recommended a much better and easier way to get working with RAG by using this no-code/low-code tool, Flowise. Here’s a guide to getting started with it.

Meanwhile, if you want more pieces on RAG, go here, here, here, here, here, here, here, here, here, here, here, here, here, here, here, here, here, here, here, and here. I know: it’s a lot. But I found all of those useful, and yes, each “here” takes you to a different link.

Software/embedding: if you are interested in the above topics, you may want to learn more about vector databases and embeddings. Here are four good links on that: one, two, three, four.

Software/models: relatedly, here are four good links on models (mostly mixtral, which I like a lot): mixtral, dolphin 25 mixtral 8x7b, dolphin 2 5 mixtral 8x7b uncensored mistral, Mistral 7B Instruct v0.2 GGUF, plus a comparison of models.

Software/OpenAI: while it is great to use Ollama for your LLM work, you may want to do work with a SaaS like OpenAI. I found that when I was doing that, these links came in handy: how OpenAI’s billing works, info on your OpenAI api keys, how to get an OpenAI key, what are tokens and how to count them, more on tokens, and learn OpenAI on Azure.

Software/SageMaker: here are some useful links on AWS’s SageMaker, including pieces on what is amazon sagemaker, a tutorial on it, how to get started quickly with Amazon SageMaker Autopilot, some amazon sagemaker examples, a number of pieces on sagemaker notebooks such as creating a sagemaker notebook, a notebooks comparison, something on distributed training notebook examples and finally this could be helpful: how to deploy llama 2 on aws sagemaker.

Software in general: these didn’t fit any specific software category, but I liked them. There’s something on python and GANs, on autogen, on FLAML, on python vector search tutorial gpt4 and finally how to use ai to build your own website!

Prompt Engineering: if you want some guidance on how best to write prompts as you work with gen AI, I recommend this, this, this, this, this, this, this, and this.

IT Companies: companies everywhere are investing in AI. Here are some pieces on what Apple, IBM, Microsoft and…IKEA…are doing:

Apple: Microsoft’s Copilot app is available for the iPhone and iPad.

IBM: Here are pieces on ibm databand with self learning for anomaly detection; IBM and AI and the EI; IBM’s Granite LLM; WatsonX on AWS; installing watsonX; watsonx-code-assistant-4z; IBM Announces Availability of Open Source Mistral AI Model on watsonx; IBM’s criteria for adopting gen AI; probable root cause accelerating incident remediation with causal AI; Watsonx on Azure; Watsonx and litellm; and conversational ai use cases for enterprises.

IKEA: here’s something on the IKEA ai assistant using chatgpt for home design.

Microsoft: from vision to value realization – a closer look at how customers are embracing ai transformation to unlock innovation and deliver business outcomes, plus an OpenAI reference.

Hardware: I tend to think of AI in terms of software, but I found these fun hardware links too. Links such as: how to run chatgpt on raspberry pi; how this maker uses raspberry pi and ai to block noisy neighbors music by hacking nearby bluetooth speakers; raspberry pi smart fridge uses chat gpt4 to keep track of your food. Here’s something on the rabbit r1 ai assistant. Here’s the poem 1 AI poetry clock which is cool.

AI and the arts: AI continues to impact the arts in ways good and bad. For instance, here’s something on how to generate free ai music with suno. Relatedly here’s a piece on gen ai, suno music, the music industry, musicians and copyright. This is a good piece on artists and AI in the Times. Also good: art that can be easily copied by AI is meaningless, says Ai Weiwei. Over at the Washington Post is something on AI image generation. In the battle with AI, here’s how artists can use glaze and nightshade to stop ai from stealing your art. Regarding fakes, here’s a piece on Taylor Swift and ai generated fake images. Speaking of fake, here’s something on AI and the porn industry. There’s also this piece on generative ai and copyright violation.

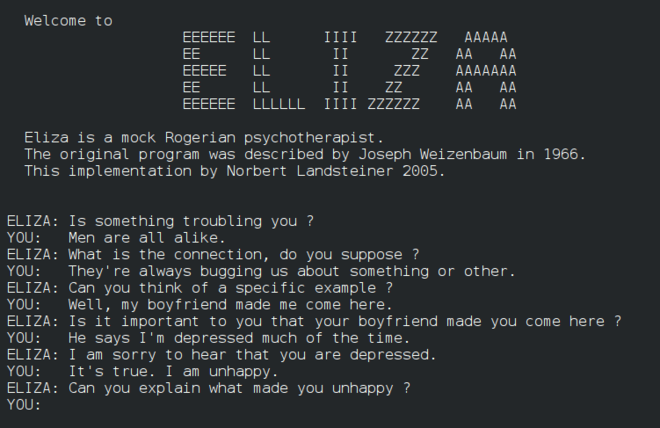

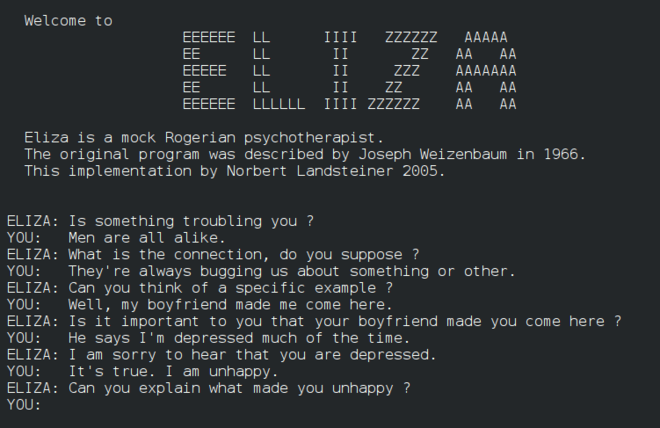

Finally: I was looking into the original Eliza recently and thought these four links on it were good: one, two, three and four. Then there are these stories: on AI to help seniors with loneliness, the new york times / openai / microsoft lawsuit, another AI lawsuit involving air canada’s chatbot, stunt AI (a bot that develops software in 7 minutes instead of 4 weeks) and a really good AI hub: chathub.gg.

Whew! That’s a tremendous amount of research I’ve done on AI in the last year. I hope you find some of it useful.

With the rise of AI, LLMs, ChatGPT and more, a new skill has risen. The skill involves knowing how to construct prompts for the AI software in such a way that you get an optimal result. This has led a number of people to start saying things like this: