For fun, I decided to use ChatGPT to see if I am ready to be replaced by AI. Part of my work is building cloud-based infrastructure with HCL, a language from HashiCorp, using a tool called Terraform. You write one or more program files in HCL, run them through Terraform, and voila, you have the infrastructure you need.

For this exercise I entered the following prompt:

Create a terraform file for IBM cloud that will provision a VPC, set up networking for the VPC, and provision a virtual server for VPC. It will have 1 security group and a rule for this security group to allow SSH connection to your virtual server instance, 1 subnet to enable networking in your VPC, 1 VPC virtual server instance and 1 floating IP address that you use to access your VPC virtual server instance over the public network.

A good prompt, I thought. Nothing vague or conflicting. Practically a form of code itself.

I was shocked when in a few seconds I got this response:

To provision a VPC, networking, and a virtual server with a security group and a floating IP address on IBM Cloud, you can use the following Terraform configuration file:

```hcl
# Configure the IBM provider
provider "ibm" {
  generation = 2
  region     = "us-south"
}

# Create a VPC
resource "ibm_is_vpc" "vpc" {
  name                      = "my-vpc"
  address_prefix_management = "auto"
}

# Create a subnet in the VPC
resource "ibm_is_subnet" "subnet" {
  name            = "my-subnet"
  vpc             = ibm_is_vpc.vpc.id
  ipv4_cidr_block = "10.240.0.0/24"
}

# Create a security group
resource "ibm_is_security_group" "security_group" {
  name        = "my-security-group"
  vpc         = ibm_is_vpc.vpc.id
  description = "Allow SSH inbound traffic"
}

# Add an SSH rule to the security group
resource "ibm_is_security_group_rule" "ssh_rule" {
  security_group = ibm_is_security_group.security_group.id
  direction      = "inbound"
  protocol       = "tcp"
  port_min       = 22
  port_max       = 22
  remote         = "0.0.0.0/0"
}

# Create a virtual server instance in the subnet
resource "ibm_is_instance" "instance" {
  name    = "my-instance"
  image   = "ibm-centos-7-10-minimal-amd64-2"
  profile = "bx2-2x8"

  primary_network_interface {
    subnet = ibm_is_subnet.subnet.id
  }

  vpc             = ibm_is_vpc.vpc.id
  security_groups = [ibm_is_security_group.security_group.id]
}

# Create a floating IP address
resource "ibm_is_floating_ip" "floating_ip" {
  name = "my-floating-ip"
}

# Associate the floating IP address with the virtual server instance
resource "ibm_is_floating_ip_attachment" "attachment" {
  floating_ip = ibm_is_floating_ip.floating_ip.address
  instance    = ibm_is_instance.instance.id
}
```

Before running the above code, replace with your IBM Cloud API key and and with the appropriate values for your IBM Cloud region and availability zone.

Once you have saved this configuration file, you can initialize Terraform by running terraform init, and then create the resources by running terraform apply. After a few minutes, Terraform will create the VPC, subnet, security group, virtual server, and floating IP address. You can then use the floating IP address to SSH into your virtual server instance over the public network.

Wow! What an amazing response, I thought! It was quick, detailed, seemingly knowledgeable. Also…wrong.

So much of the code was fundamentally wrong that the only way to get it working was to rewrite much of it and add a bunch of new code. I can’t even tell you how it came up with some of the statements: they are not defined that way in the Terraform specs for IBM Cloud.
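For comparison, here is a minimal sketch of the same request written against the resources the IBM Cloud Terraform provider documents. It is untested and is not the rewrite described above. It assumes the us-south region and the us-south-1 zone, an SSH key that has already been uploaded to IBM Cloud, and three hypothetical input variables (`ibmcloud_api_key`, `ssh_key_name`, `image_name`). Compared with the generated code, the subnet and instance each get a `zone`, the rule points at its security group through `group` with the port range in a `tcp` block, the image and SSH key are looked up by name to get their IDs, the security group is attached on the instance's primary network interface, and the floating IP is bound to that interface through `target` instead of a separate attachment resource. Provider releases change over time, so the registry documentation for the version you use is the final word on argument names.

```hcl
# Sketch only: assumes region us-south, zone us-south-1, and the
# hypothetical input variables declared below.
terraform {
  required_providers {
    ibm = {
      source = "IBM-Cloud/ibm"
    }
  }
}

variable "ibmcloud_api_key" {
  type      = string
  sensitive = true
}

# Name of an SSH key already uploaded to IBM Cloud (hypothetical variable).
variable "ssh_key_name" {
  type = string
}

# Name of a VPC image available in the region (hypothetical variable).
variable "image_name" {
  type = string
}

provider "ibm" {
  ibmcloud_api_key = var.ibmcloud_api_key
  region           = "us-south"
}

# Look up the IDs of the key and image, which the instance needs.
data "ibm_is_ssh_key" "key" {
  name = var.ssh_key_name
}

data "ibm_is_image" "image" {
  name = var.image_name
}

resource "ibm_is_vpc" "vpc" {
  name                      = "my-vpc"
  address_prefix_management = "auto"
}

# With automatic address prefixes, 10.240.0.0/24 falls inside the
# default prefix for us-south-1.
resource "ibm_is_subnet" "subnet" {
  name            = "my-subnet"
  vpc             = ibm_is_vpc.vpc.id
  zone            = "us-south-1"
  ipv4_cidr_block = "10.240.0.0/24"
}

resource "ibm_is_security_group" "security_group" {
  name = "my-security-group"
  vpc  = ibm_is_vpc.vpc.id
}

# Inbound SSH from anywhere, as the prompt asked; narrow "remote" in practice.
resource "ibm_is_security_group_rule" "ssh_rule" {
  group     = ibm_is_security_group.security_group.id
  direction = "inbound"
  remote    = "0.0.0.0/0"

  tcp {
    port_min = 22
    port_max = 22
  }
}

resource "ibm_is_instance" "instance" {
  name    = "my-instance"
  image   = data.ibm_is_image.image.id
  profile = "bx2-2x8"
  vpc     = ibm_is_vpc.vpc.id
  zone    = "us-south-1"
  keys    = [data.ibm_is_ssh_key.key.id]

  # The security group is attached to the instance's network interface.
  primary_network_interface {
    subnet          = ibm_is_subnet.subnet.id
    security_groups = [ibm_is_security_group.security_group.id]
  }
}

# Binding the floating IP to the primary network interface makes the
# instance reachable from the public network.
resource "ibm_is_floating_ip" "floating_ip" {
  name   = "my-floating-ip"
  target = ibm_is_instance.instance.primary_network_interface[0].id
}

output "floating_ip_address" {
  value = ibm_is_floating_ip.floating_ip.address
}
```

After supplying the three variables (for example in a terraform.tfvars file), terraform init followed by terraform apply would create the resources, and the floating_ip_address output is the address to SSH to.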

I even had the AI regenerate the code to see if it could get it right the second time. Instead, the new code had nine errors in it.

Fine. My manager provided me with a prompt of his own: see if it will work on AWS. (Good prompt, Ted!)

I tried it on both AWS and Azure. With Azure, the response was an incomplete script. (At least the IBM script was complete, though wrong.) With AWS, the script was better: I could run terraform plan and Terraform thought it looked good. But once I ran terraform apply to build the resources, it failed.

I suspect the larger problem is a lack of sufficient overlapping data for the AI tools to train on. So the AI sort of gets the code right, but “sort of” isn’t really good enough.

I see people on the Internet raving about how well AI is doing at writing code. Some of the examples are interesting, but I think it has a way to go. I’ll stick to doing my day job. Without AI to help. 🙂

If you do anything with AI, then knowing how to write a good prompt is essential. It doesn’t matter if you’re doing complex vibe coding or merely sending a question to ChatGPT or Google Gemini or Microsoft Copilot, the prompt you use is the key to getting good responses.

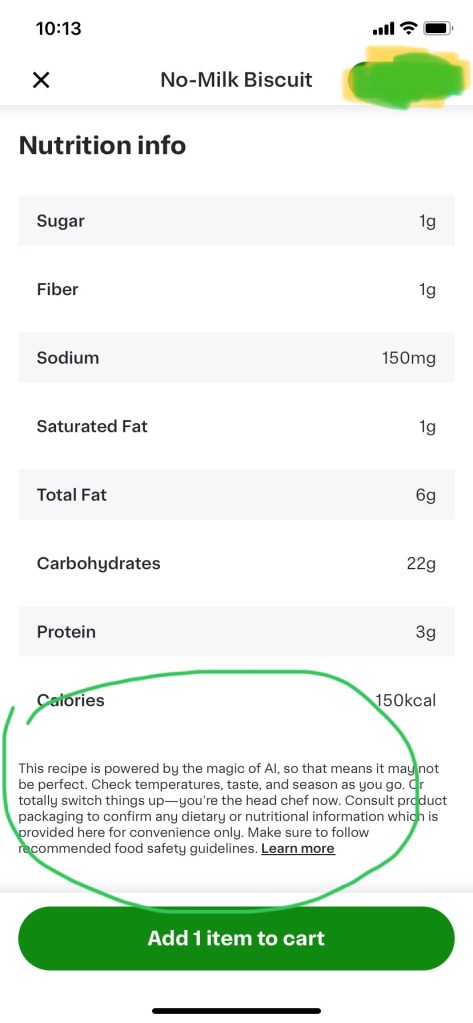

Food bloggers are seeing a drop in traffic as home cooks turn to AI, according to

Yep, it’s true. If you have some technical skill, you can download this repo from GitHub:

I have been arguing recently about the limits of current AI and why it is not going to take over the job of coding yet. I am not alone in this regard. Clive Thompson, who knows a lot about the topic, recently wrote this:
